Architectural Overview

The Dynamic Multi-protocol Manager (DMM) is an arbiter of RF commands between multiple protocol stacks and the RF driver. It controls the scheduling of RF commands by providing a set of hooks to the RF driver, replacing the default scheduling behavior.

The DMM bases scheduling on the constraints and requirements set by the policy table and the global priority table, and it can re-order the RF command queue provided by the RF driver as needed. The DMM also intercepts RF driver API calls and can, in certain scenarios, modify RF commands beyond their scheduling order, based on the current policy and scheduled stack activity.

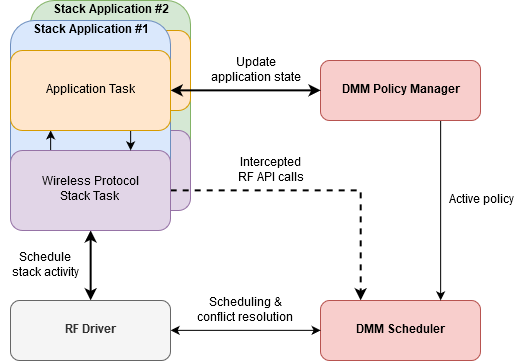

The diagram below shows how the DMM interacts with the protocol stacks and user application.

Figure 27. DMM architectural overview block diagram

The red blocks in the block diagram above are part of the DMM component. The other components (RF driver, application task, protocol stack) are also present in a typical single-mode application. As the diagram shows, the DMM is made up of two primary components:

- DMM scheduler

- DMM policy manager

The DMM scheduler is a single component in the application. It keeps track of the RF commands submitted by each stack and of PHY switching events originating in the RF driver. The DMM policy manager knows the current state of each stack application as well as which scheduling parameters to use for a specific combination of application states. The scheduler gets information from the policy manager on how to schedule the associated stacks.

The policy manager and the scheduler are initialized in DMMPolicy_init() and DMMSch_init(), respectively. Before either module is used, it must be opened by calling DMMPolicy_open() or DMMSch_open().

Setting up the antenna switching is important if one stack operates in the 2.4 GHz band while the other uses the Sub-1 GHz band. The DMM cannot control the antenna switching directly; switching is typically handled by RF driver callbacks registered in the project’s board file.

The DMM scheduler software redefines some of the command execution APIs of the RF driver. Therefore, with a function mapping table like the one below, no changes to the stack implementation are needed.

```c
#include <ti/dmm/dmm_scheduler.h>

#define RF_open            DMMSch_rfOpen
#define RF_postCmd         DMMSch_rfPostCmd
#define RF_runCmd          DMMSch_rfRunCmd
#define RF_scheduleCmd     DMMSch_rfScheduleCmd
#define RF_runScheduleCmd  DMMSch_rfRunScheduleCmd
#define RF_cancelCmd       DMMSch_rfCancelCmd
#define RF_flushCmd        DMMSch_rfFlushCmd
#define RF_runImmediateCmd DMMSch_rfRunImmediateCmd
#define RF_runDirectCmd    DMMSch_rfRunDirectCmd
```
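To illustrate why such a mapping needs no stack changes, here is a minimal, self-contained sketch of the preprocessor technique. It does not use the real RF driver or DMM headers: `Demo_postCmd`, `DemoDmm_postCmd`, and `stackSendPacket` are hypothetical stand-ins, and their signatures are simplified assumptions.

```c
/* Hypothetical stand-ins; these are NOT the real RF driver or DMM signatures. */
static int driverCalls = 0; /* calls that reached the "driver" */
static int dmmCalls = 0;    /* calls that passed through the "DMM" wrapper */

/* Stand-in for a driver function that a stack would normally call. */
static int Demo_postCmd(int cmd)
{
    driverCalls++;
    return cmd;
}

/* Stand-in for a DMM replacement with the same signature. */
static int DemoDmm_postCmd(int cmd)
{
    dmmCalls++;
    /* A scheduler would apply its policy here before forwarding the call. */
    return Demo_postCmd(cmd);
}

/* The mapping: from this point on, the driver name resolves to the wrapper. */
#define Demo_postCmd DemoDmm_postCmd

/* Unmodified "stack" code: it still spells the driver function name. */
static int stackSendPacket(void)
{
    return Demo_postCmd(42);
}
```

Calling `stackSendPacket()` increments both counters, which shows that the call passed through the wrapper before reaching the driver stand-in. Because the redirection happens at compile time, the mapping must be visible to the stack's source files, for example through a header they already include.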

The DMM scheduler does not redefine all RF APIs; most of them are left as is. Primarily, the DMM intercepts RF_open(). The DMM scheduler provides the RF driver with hooks for scheduling and executing RF commands.

When the scheduler hook is invoked from the RF driver, the DMM updates the priority based on the active policy and scheduled stack activity. It then inspects the command and, based on the active policy, does one of the following:

- Put the command in the queue as is

- Put the command in the queue with a delayed start time

- Call the conflict resolution hook if there is a conflict in time

- Cancel the command based on the current policy
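The four outcomes above can be pictured with a small decision function. This is only a sketch under stated assumptions, not the DMM implementation: every type and name below (`DemoCmd`, `demoScheduleHook`, the `maxDelay` and `allowedByPolicy` fields) is hypothetical, the order of the checks is an assumption, and timer wrap-around is ignored.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model; none of these types come from the DMM or RF driver. */
typedef enum {
    DEMO_QUEUE_AS_IS,
    DEMO_QUEUE_DELAYED,
    DEMO_CALL_CONFLICT_HOOK,
    DEMO_CANCEL
} DemoSchedAction;

typedef struct {
    uint32_t startTime;       /* requested start, in ticks */
    uint32_t duration;        /* expected duration, in ticks */
    uint32_t maxDelay;        /* how far the start may slip, in ticks */
    bool     allowedByPolicy; /* false if the active policy forbids it */
} DemoCmd;

/* Two commands conflict when their time windows intersect. */
static bool demoOverlaps(const DemoCmd *a, const DemoCmd *b)
{
    return a->startTime < b->startTime + b->duration &&
           b->startTime < a->startTime + a->duration;
}

/* Decide what to do with an incoming command given one queued command. */
static DemoSchedAction demoScheduleHook(const DemoCmd *queued, DemoCmd *incoming)
{
    if (!incoming->allowedByPolicy) {
        return DEMO_CANCEL;
    }
    if (queued == NULL || !demoOverlaps(queued, incoming)) {
        return DEMO_QUEUE_AS_IS;
    }

    /* Overlap implies incoming->startTime < queuedEnd, so this is positive. */
    uint32_t queuedEnd = queued->startTime + queued->duration;
    uint32_t neededDelay = queuedEnd - incoming->startTime;

    if (neededDelay <= incoming->maxDelay) {
        incoming->startTime = queuedEnd; /* start after the queued command */
        return DEMO_QUEUE_DELAYED;
    }
    return DEMO_CALL_CONFLICT_HOOK;
}
```

For a queued command occupying ticks 100 to 150, an incoming command at tick 120 with `maxDelay` of 40 is moved to tick 150, while the same command with `maxDelay` of 5 is handed to conflict resolution.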

The execution hook is invoked from the RF driver when a command from the queue is about to be run. At this point, the DMM decides whether to run the command, based on the currently active policy and status. This includes resolving potential conflicts between commands, which means it will either:

- Cancel the conflicting command

- Cancel a command if the stack has been blocked by the application

- Delay one of the conflicting commands

Note

For further information on how a stack may be blocked by the application, see the Block Mode section in Application States and Scheduling Policies.

A stack is registered by calling DMMSch_registerClient(). The DMM scheduler assumes that each stack operates in a separate TI-RTOS task. Since the remapped RF driver functions do not know anything about tasks, the task handle submitted during registration is later used to deduce the currently active stack in each function call.
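The lookup from task handle to stack can be sketched as a small registry. This is an illustrative model, not the DMM scheduler's code: `demoRegisterClient`, `demoRoleForTask`, and the `DemoRole` values are hypothetical, and the current task is passed in explicitly here, whereas a real wrapper would presumably obtain it from the RTOS.

```c
#include <stddef.h>

/* Hypothetical model; these names are not part of the DMM scheduler API. */
#define DEMO_MAX_CLIENTS 2

typedef enum {
    DEMO_ROLE_NONE = 0,
    DEMO_ROLE_STACK_A,
    DEMO_ROLE_STACK_B
} DemoRole;

typedef struct {
    const void *taskHandle; /* opaque handle of the task running the stack */
    DemoRole    role;       /* which stack that task belongs to */
} DemoClient;

static DemoClient demoClients[DEMO_MAX_CLIENTS];
static size_t demoNumClients = 0;

/* Remember which task runs which stack. Returns 0 on success, -1 on error. */
static int demoRegisterClient(const void *taskHandle, DemoRole role)
{
    if (taskHandle == NULL || demoNumClients >= DEMO_MAX_CLIENTS) {
        return -1;
    }
    demoClients[demoNumClients].taskHandle = taskHandle;
    demoClients[demoNumClients].role = role;
    demoNumClients++;
    return 0;
}

/* Deduce the calling stack from the handle of the task making the call. */
static DemoRole demoRoleForTask(const void *currentTask)
{
    for (size_t i = 0; i < demoNumClients; i++) {
        if (demoClients[i].taskHandle == currentTask) {
            return demoClients[i].role;
        }
    }
    return DEMO_ROLE_NONE; /* the caller is not a registered stack task */
}
```

This also shows why the one-task-per-stack assumption matters: if two stacks shared a task, a handle comparison could not tell them apart.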

The actual command scheduling is fully transparent to the application. The application only needs to keep the policy manager updated with the current application state, which is done using the DMMPolicy_updateStackState() API.
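As a rough illustration of how a state update can select scheduling parameters, the sketch below keeps a small table keyed on combinations of stack states and re-selects the active entry on every update. It is a simplified model under assumptions: the table layout, the state flags, and the names `demoUpdateStackState` and `demoFavoredStack` are hypothetical and do not reflect the actual DMM policy table format.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model; names and layout are not taken from the DMM headers. */
#define DEMO_NUM_STACKS 2

/* Application states as bit flags, so one entry can match several states. */
#define DEMO_STATE_IDLE       0x1u
#define DEMO_STATE_CONNECTING 0x2u
#define DEMO_STATE_CONNECTED  0x4u
#define DEMO_STATE_ANY        0xFFFFFFFFu

typedef struct {
    uint32_t stateMask[DEMO_NUM_STACKS]; /* states each stack must be in */
    int      favoredStack;               /* stack that wins conflicts */
} DemoPolicyEntry;

/* Entries are checked in order; the last one is a catch-all default. */
static const DemoPolicyEntry demoPolicyTable[] = {
    { { DEMO_STATE_CONNECTING, DEMO_STATE_ANY }, 0 },
    { { DEMO_STATE_ANY,        DEMO_STATE_ANY }, 1 },
};
#define DEMO_NUM_ENTRIES (sizeof(demoPolicyTable) / sizeof(demoPolicyTable[0]))

static uint32_t demoStackState[DEMO_NUM_STACKS] = {
    DEMO_STATE_IDLE, DEMO_STATE_IDLE
};
static size_t demoActiveEntry = DEMO_NUM_ENTRIES - 1;

/* Record the new state and re-select the first matching table entry. */
static int demoUpdateStackState(int stack, uint32_t newState)
{
    if (stack < 0 || stack >= DEMO_NUM_STACKS) {
        return -1;
    }
    demoStackState[stack] = newState;

    for (size_t i = 0; i < DEMO_NUM_ENTRIES; i++) {
        int match = 1;
        for (int s = 0; s < DEMO_NUM_STACKS; s++) {
            if ((demoPolicyTable[i].stateMask[s] & demoStackState[s]) == 0) {
                match = 0;
            }
        }
        if (match) {
            demoActiveEntry = i;
            break;
        }
    }
    return 0;
}

/* Scheduling parameter the scheduler would read from the active entry. */
static int demoFavoredStack(void)
{
    return demoPolicyTable[demoActiveEntry].favoredStack;
}
```

In this model, stack 1 is favored by default, stack 0 is favored while it reports the connecting state, and the default applies again once stack 0 reports that it is connected. The application only reports states; it never touches the command queue.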