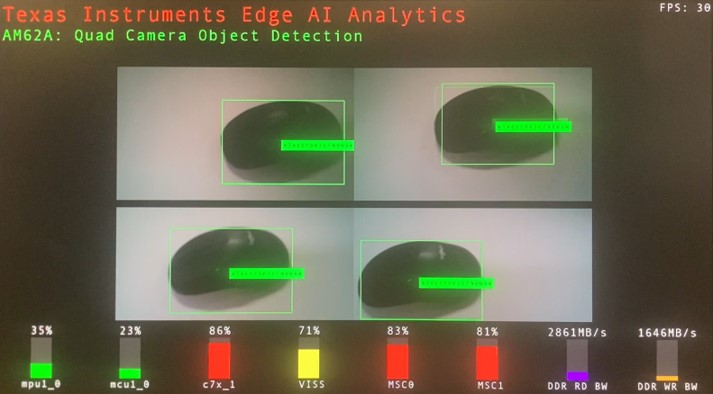

Texas Instruments

This demo implements a multi-camera AI application on AM62A processors, based on the V3Link Camera Solution Kit and 4x IMX219 cameras. It shows how the AM62A processes the video streams and runs object detection models at 120 FPS, with bandwidth left for expansion.

Texas Instruments

This demo offers real-time, vision-based people tracking with statistical insights such as total visitors, current occupancy, and visit duration. It also provides a heatmap that highlights frequently visited areas. The demo has applications in areas such as retail, building automation, and security.
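As a rough illustration of how such statistics could be derived from tracker output, the sketch below keeps the first and last time each track ID was seen. It is not the demo's implementation: the `VisitStats` class, the `timeout_s` parameter, and the assumption that the tracker reports a list of integer track IDs per frame are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VisitStats:
    # Assumed: a person counts as "present" if seen within this many seconds.
    timeout_s: float = 2.0
    first_seen: dict = field(default_factory=dict)
    last_seen: dict = field(default_factory=dict)

    def update(self, track_ids, now_s):
        """Record the track IDs reported by a (hypothetical) tracker for one frame."""
        for tid in track_ids:
            self.first_seen.setdefault(tid, now_s)
            self.last_seen[tid] = now_s

    def total_visitors(self):
        """Number of distinct track IDs observed so far."""
        return len(self.first_seen)

    def current_occupancy(self, now_s):
        """Number of tracks seen within the last timeout_s seconds."""
        return sum(1 for t in self.last_seen.values() if now_s - t <= self.timeout_s)

    def visit_durations(self):
        """Seconds between the first and last sighting of each track."""
        return {tid: self.last_seen[tid] - self.first_seen[tid] for tid in self.first_seen}


stats = VisitStats()
stats.update([1, 2], now_s=0.0)
stats.update([1, 2], now_s=1.0)
stats.update([2, 3], now_s=5.0)
print(stats.total_visitors(), stats.current_occupancy(5.0), stats.visit_durations())
```

A real deployment would also need to handle track ID switches and re-entries, which this sketch treats as separate or continuous visits without checking.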

Texas Instruments

This demo shows single-camera depth estimation using a deep learning model. The MiDaS CNN produces relative depth information that distinguishes people and objects from each other and from the background.
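Because the depth is relative rather than metric, one simple way to use it is to normalize each frame and threshold it. The sketch below assumes the model output is a 2D map where larger values mean nearer surfaces (MiDaS predicts relative inverse depth); the function names and the 0.5 threshold are illustrative choices, not part of the demo.

```python
def normalize_depth(depth):
    """Scale a 2D relative-depth map (list of rows) to the range [0, 1]."""
    flat = [v for row in depth for v in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    if span == 0:
        # A flat map carries no relative depth information.
        return [[0.0 for _ in row] for row in depth]
    return [[(v - lo) / span for v in row] for row in depth]


def foreground_mask(depth, threshold=0.5):
    """Mark pixels whose normalized value is at or above the (assumed) threshold."""
    return [[v >= threshold for v in row] for row in normalize_depth(depth)]


# Toy 2x3 prediction: larger values are assumed to be nearer to the camera.
pred = [[1.0, 1.0, 9.0],
        [1.0, 8.0, 9.0]]
mask = foreground_mask(pred)
print(mask)
```

Per-frame normalization means the mask separates near from far within one image only; values are not comparable across frames or scenes.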